AWS ParallelCluster API

In Part I you created an HPC system in the cloud using the AWS ParallelCluster CLI and submitted a test MPI job. In Part II, you will run through the following steps:

- Use the AWS ParallelCluster API to manage your HPC cluster programmatically with a Python interface.

- Interact with the API using a web interface that will call the API on your behalf.

About the AWS ParallelCluster API

AWS ParallelCluster API is a serverless application that, once deployed to your AWS account, enables programmatic access to AWS ParallelCluster features via an API.

AWS ParallelCluster API is distributed as a self-contained AWS CloudFormation template. The template consists of an Amazon API Gateway endpoint, which exposes AWS ParallelCluster features, and an AWS Lambda function, which executes the invoked features.
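To make the API Gateway side of this more concrete, here is a minimal sketch of what calls against the deployed endpoint might look like. The endpoint URL is a made-up placeholder (the real one comes from your CloudFormation stack outputs), the helper names are hypothetical, and the `/v3/clusters` paths and body fields reflect the version 3 REST API as commonly documented, so check them against the API reference for your version. The sketch only builds request descriptions; a real call would also need to be signed with your AWS credentials before being sent.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint: replace with the URL from your CloudFormation stack outputs.
API_ENDPOINT = "https://example123.execute-api.us-east-1.amazonaws.com/prod"


def build_request(method, path, region, body=None):
    """Describe an HTTP request to the API without sending it."""
    query = urlencode({"region": region})
    return {
        "method": method,
        "url": f"{API_ENDPOINT}{path}?{query}",
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body) if body is not None else None,
    }


def list_clusters(region):
    """Request that lists the clusters in a region."""
    return build_request("GET", "/v3/clusters", region)


def create_cluster(region, name, config_yaml):
    """Request that creates a cluster from a YAML configuration string."""
    body = {"clusterName": name, "clusterConfiguration": config_yaml}
    return build_request("POST", "/v3/clusters", region, body)


def delete_cluster(region, name):
    """Request that deletes a cluster by name."""
    return build_request("DELETE", f"/v3/clusters/{name}", region)


if __name__ == "__main__":
    request = create_cluster("us-east-1", "demo", "Region: us-east-1\n")
    print(request["method"], request["url"])
```

Keeping request construction separate from sending makes it easy to inspect what would be sent before wiring in credentials and an HTTP client.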

Why use the AWS ParallelCluster API

The ability to manage HPC clusters through an API provides several advantages over the use of the CLI:

- The API makes it easier to create event-driven and scripted workflows.

- As part of these workflows, you can treat your HPC clusters as ephemeral: they exist only for the duration of the computational stage where they are needed and are terminated once your computation is complete.

- It lets you abstract away cluster management and create clusters from templates that can be customized for specific computational stages.
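The template idea in the last point can be sketched with Python's standard `string.Template`. This is an illustration under assumptions rather than a prescribed approach: the stage names, instance types, and subnet ID are invented, and the YAML keys follow the version 3 cluster configuration format in abbreviated form (a real configuration typically needs more settings, such as SSH access).

```python
from string import Template

# Abbreviated cluster configuration with placeholders for stage-specific values.
CLUSTER_TEMPLATE = Template("""\
Region: $region
Image:
  Os: alinux2
HeadNode:
  InstanceType: $head_instance_type
  Networking:
    SubnetId: $subnet_id
Scheduling:
  Scheduler: slurm
  SlurmQueues:
    - Name: $queue_name
      ComputeResources:
        - Name: compute
          InstanceType: $compute_instance_type
          MinCount: 0
          MaxCount: $max_nodes
      Networking:
        SubnetIds:
          - $subnet_id
""")

# Hypothetical per-stage settings; every value here is a placeholder.
STAGES = {
    "preprocess": {
        "region": "us-east-1",
        "head_instance_type": "c5.large",
        "subnet_id": "subnet-0123456789abcdef0",
        "queue_name": "prep",
        "compute_instance_type": "c5.xlarge",
        "max_nodes": 4,
    },
    "simulation": {
        "region": "us-east-1",
        "head_instance_type": "c5.xlarge",
        "subnet_id": "subnet-0123456789abcdef0",
        "queue_name": "sim",
        "compute_instance_type": "c5n.18xlarge",
        "max_nodes": 16,
    },
}


def render_cluster_config(stage_name):
    """Return the YAML configuration for one stage; raises KeyError if a value is missing."""
    return CLUSTER_TEMPLATE.substitute(STAGES[stage_name])


if __name__ == "__main__":
    print(render_cluster_config("simulation"))
```

The rendered string is the kind of value that would be passed as the cluster configuration when creating a cluster through the API.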

For example, an Amazon EventBridge rule can detect when files are stored in a particular Amazon S3 bucket and invoke an AWS Lambda function that creates a cluster through the API. The cluster processes the files, and its termination is triggered through the API once processing is done.
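The first half of that workflow could look roughly like the handler below. It assumes the event has the shape EventBridge delivers for an S3 "Object Created" notification (`detail.bucket.name` and `detail.object.key`). The `create_cluster` callable, the `job` name prefix, the naming rule, and the 60-character cap are all hypothetical choices made for this sketch; in a real function the callable would wrap a signed call to the ParallelCluster API.

```python
import re


def cluster_name_for(key, prefix="job"):
    """Derive a cluster name from an object key, keeping letters, digits, and hyphens."""
    stem = key.rsplit("/", 1)[-1].rsplit(".", 1)[0]
    safe = re.sub(r"[^A-Za-z0-9-]", "-", stem).strip("-")
    return f"{prefix}-{safe}"[:60]


def handler(event, context=None, create_cluster=None):
    """Lambda-style entry point: read the new object's location and request a cluster."""
    detail = event["detail"]
    bucket = detail["bucket"]["name"]
    key = detail["object"]["key"]
    name = cluster_name_for(key)
    input_uri = f"s3://{bucket}/{key}"
    if create_cluster is not None:
        # Assumption: the caller supplies a function that calls the API.
        create_cluster(name, input_uri)
    return {"clusterName": name, "input": input_uri}


if __name__ == "__main__":
    sample_event = {
        "source": "aws.s3",
        "detail-type": "Object Created",
        "detail": {
            "bucket": {"name": "my-input-bucket"},
            "object": {"key": "incoming/run_01.dat"},
        },
    }
    print(handler(sample_event))
```

Termination would be a separate step, for instance a second function invoked when the job reports completion, that issues the delete request for the same cluster name.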

AWS ParallelCluster API Architecture

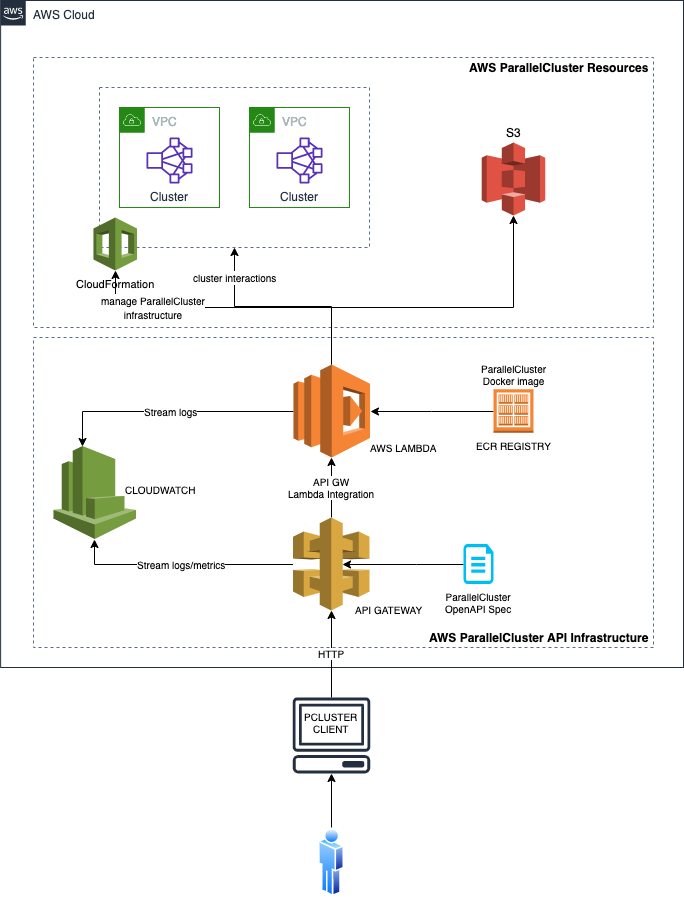

A high level of AWS ParallelCluster API is shown in the image below:

AWS ParallelCluster API lets you create and manage clusters programmatically. For example, you can call the API from an AWS Lambda function triggered when a new file is stored in an Amazon S3 bucket, or use it to create a dedicated cluster that processes a stream of jobs and then shuts down.